
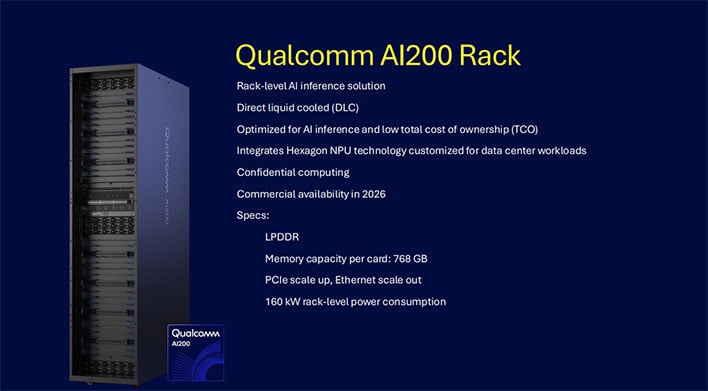

What that boils down to for the AI200 is support for up to 768GB of LPDDR memory per card, which Qualcomm says enables exceptional scale and flexibility for AI inference. Qualcomm's AI200 rack also integrates a Hexagon NPU and, as a whole, is direct liquid cooled (DLC).

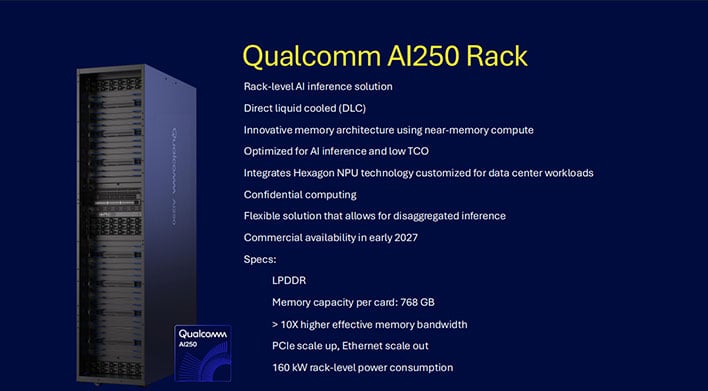

Meanwhile, the AI250 offers the same 768GB memory capacity per card while introducing what Qualcomm describes as an innovative memory architecture based on near-memory computing, promising a generational leap in efficiency and performance for AI inference workloads. According to Qualcomm, it delivers more than 10x higher effective memory bandwidth with much lower power consumption.

“With Qualcomm AI200 and AI250, we’re redefining what’s possible for rack-scale AI inference. These innovative new AI infrastructure solutions empower customers to deploy generative AI at unprecedented TCO, while maintaining the flexibility and security modern data centers demand,” said Durga Malladi, SVP & GM, Technology Planning, Edge Solutions & Data Center, Qualcomm Technologies, Inc.

“Our rich software stack and open ecosystem support make it easier than ever for developers and enterprises to integrate, manage, and scale already trained AI models on our optimized AI inference solutions. With seamless compatibility for leading AI frameworks and one-click model deployment, Qualcomm AI200 and AI250 are designed for frictionless adoption and rapid innovation,” Malladi added.